by Alexander Görlach

It was a meeting of two AI giants: Elon Musk and Jack Ma discussed the future of the world in Shanghai last week. Will AI be our doom or our redemption?

The two tech billionaires expressed different views. Musk, as expected, drew a rather bleak picture, whereas Ma seemed not to worry at all about the potential negative implications of AI. In his view, humans will adapt to the new realities. Musk, on the other hand, feels that mankind may be outmaneuvered by new technology because it is beyond our conceptual capacity.

It became more exciting, however, when the two agreed on one point: the need for AI to be capable of facilitating love, Business Insider reports. Jack Ma had introduced the concept of a “love quotient”, LQ (analogous to IQ, the intelligence quotient, and EQ, the emotional quotient), a while before the Shanghai summit. It seems that Ma is trying to capture the alleged human particularity, often expressed in terms such as creativity, mindfulness, or the soul, and make it accessible to quantifiable measurement.

Indeed, whether an action, let’s say an automated car ride, qualifies as successful may depend on factors deriving from this particularity. What it means to feel safe in a car may be defined not only by the technology in place but also by the human sentiment of safety. For AI to understand these factors, it would need to be able to “feel” safety too.

This, however, seems difficult (though not impossible) to achieve, as the origin of our sentiments, and the way they lead us to make crucial decisions in a heartbeat, is not yet fully understood by neuroscientists.


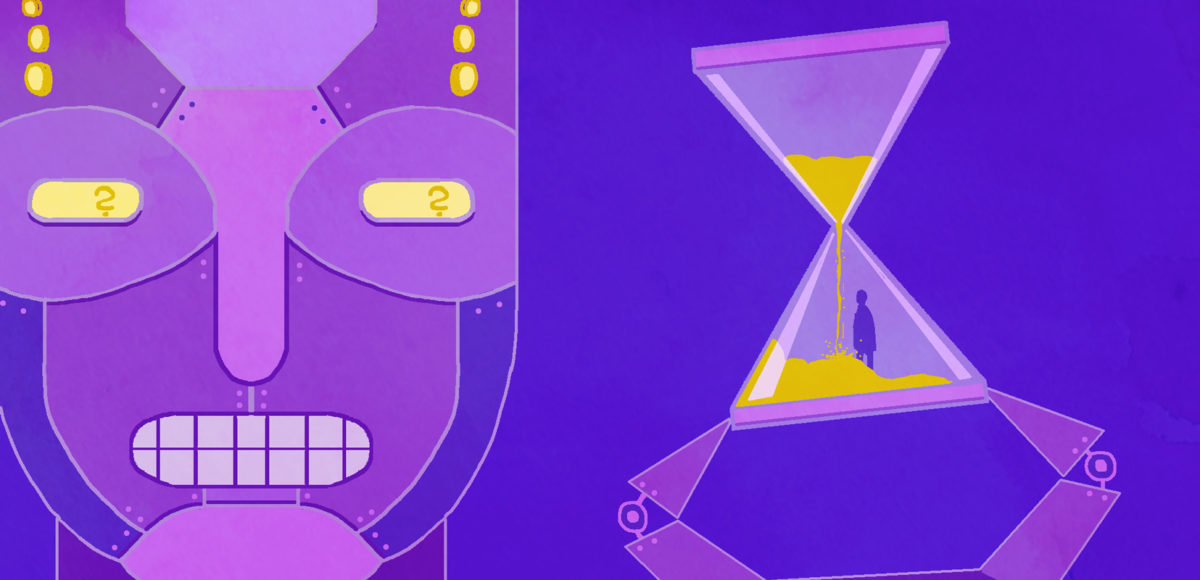

One human faculty, however, is not taken into account within the triangle of LQ, EQ, and IQ: the human capacity to make decisions along a trajectory, by understanding and living with a concept of time. Our temporality enables us to make decisions on the basis of previous ones. This decision making draws on positive or negative experiences with people in similar situations in the past. Man is a temporal being and as time is passing, so is his memory. Proponents of AI may say that algorithmically supported decision making would not have to take flawed memory into account.

Yet neuroscience tells us that forgetting is not only a relief for the brain but also a necessity in order to remember the important stuff successfully. What is important, however, depends strongly on our preferences, which change over the course of our lifetime.


This brings us back to the point of temporality. Is there an “ethics of time”? Time is a factor in many equations in physics, so should it not become a parameter in AI as well? We do in fact have an “ethics of time”, as we speak about “time well spent” and something “not worth my time”. So the fruitfulness of our human endeavors is measured, amongst other things, by time. In terms of AI, one might have thought that making decisions super fast would settle the question of timeliness. But “fast” is not a measure of quality; some would even argue the contrary. We may need a “TQ”, a time quotient, as well in order to properly express LQ, EQ, and IQ.

That’s something for Jack Ma and Elon Musk to discuss when they meet up next, well, time.

| Technology, AI and ethics.
