by Adina Popescu

Nobody can predict the future, so beware of people who call themselves “futurists”.

Beware of documentaries, online videos, and stories that claim to know the future of technology and tell you “what society will look like in 2029”, because all of us are creating the future, every day. There is no future except the one we allow to happen.

I was born in Romania and was very little when my parents decided to escape Ceausescu's dictatorship, and so they did, in spectacular fashion. Unfortunately, it took them about three years. While my mother escaped via Italy to Germany, my father and I were left behind in Romania.

One of my earliest memories is of sitting in front of the TV, watching the one and only trusted news channel (fake news, after all, is not an invention of Web 2.0). I was watching the dictator speak when my father rushed in and switched off the TV. He got mad at me because I was looking at Ceausescu with the loving eyes of a child who sees Santa Claus for the first time. This was because the state kindergarten for ideological reeducation that I was forced to attend (my mother was, after all, a ‘traitor’ for leaving the country) had taught me that Nicolae and Elena Ceausescu were larger-than-life saviors and my best friends.

But of course, to a four-year-old girl, my father was a king too, and he was always right. So when he told me that Ceausescu was our worst enemy and the reason we were unable to see my mother, I experienced my first conceptual dilemma. I remember feeling my brain crack in half as I had to decide between two truths: the ideology of the institution that was holding my soul hostage, and my hero, my dad.

I decided to believe my dad, and in the next phone call to my mom, I said: “Mom, the evil people are not allowing us to come and see you.” Of course, my parents were shocked that I had said this over the phone, knowing that all our conversations were monitored. At that time, surveillance was not the sexy, elegant surveillance of modern-day Silicon Valley tech; it was the clumsy analog clicking on a bulky phone line.

After huge personal sacrifices, we did not make it to the US after all, but to Germany. Never mind: both were “the West”, and it was glorious, or so I was told. I was also told that what we had experienced with the Securitate in Romania would never happen again in the free world.

When I later moved to New York, I was given the opportunity to continue my studies in philosophy. Obama had just been elected, Snowden was not yet in the picture, no one knew about the back doors in the encryption protecting our digital lives, and life was good.

The transition started slowly. Every time I passed through airport security, I could feel that something was changing. News popped up here and there, along with the understanding that with social media we had all entered into a deal with the devil. Then Kim Kardashian came into the picture, and it became crystal clear to me that something had changed.

Even in Berlin, the culture changed. What had at first been dominated by peer-to-peer communities and artists was slowly turning into the wonderfully clean space of start-ups, all trying to crack that one algorithm that identifies a handbag in a video, tags it, and links it back to an online shopping site. Everyone hoped to be the next Instagram: to be bought by Facebook for $1 billion with only 13 employees.


There is a problem in our understanding of innovation

This brand-new space, the utter digitalization of our lives and of our social and human interactions, brings up a problem. Especially in the past five years, we have seen our trust in institutions slowly erode, creating a social trust vacuum. This trust vacuum is a subtle, soft, and dangerous existential crisis for us and our democracies. Relativism has taken over, leaving people feeling unable to decide which cause is worth fighting for, which news story is true, what is important, and whom to donate money to, all while wondering whether their actions and voices still matter.

The big promise of the Internet was to give all people access to the information they need, from public legal documents to research papers and trusted news sources, so that we could act as informed, sovereign citizens. Unfortunately, Web 2.0 is structured in such a way that every object exists as an infinite number of copies. Huge mountains of raw data can be kept in corporate and governmental server silos, which in turn can be, and are being, used by ad tech and political campaigns to influence citizens. There is something deeply undemocratic in how we handle and monetize big data, which inevitably leads us to the question that Jaron Lanier, the father of virtual reality, asked:

Who owns the future?

How can we upgrade the design of our current digital networks into something that is more in line with our human rights and democratic values?

The overlap between military technological innovation and consumer gadgets and media is highly problematic. If your system architecture is designed a priori to be panoptic, built for gathering classified, centralized intelligence and for surveillance, then so is the society you are creating. There is an intrinsic relationship between the technological advancement of a society and its military spending, and there are problems that arise from this understanding of societal “development”. Google, for instance, came close to launching a censored search engine for the Chinese government while at the same time developing killer A.I. for Pentagon drones. So our question remains:

What is innovation? How do we want to innovate in order to create the future that we actually want to live in?

The social media and search engines that we use today are panoptic at their core. It takes a strong government to constantly regulate them, and it takes only a slightly different government, like the one in China, to bring out their panoptic qualities a little more. I am referring here to China's social credit system, which realizes the terror of an Orwellian surveillance state simply by using social media and high-security technology as tools of oppression.

The trouble is that what China is using is based on the same architecture and ‘best practices’ that we are using. All it takes is an all-powerful government, or a “threat to our security”, to turn this ready-built panopticon against its own people.

So shouldn’t we design a system architecture that is more in line with our democratic values? History has shown that we cannot rely on anyone not to take advantage of something so freely given: unprotected, beautiful raw data in all its innocence, from which entities can draw immense intelligence. And of course, this data is then monetized and sold to third parties, who in turn use its analytics to influence the public.

To me, there is no structural difference between taking people’s private data for free and drawing intelligence from it in order to sell the masses a shoe via targeted advertising, and using the same data to sell the masses off to a new presidential candidate (Cambridge Analytica comes to mind). The latter may have led to the election of Trump, the former to the total commodification of our bodies, our personal lives, and our relationships.

Final Fantasy

Through my work with virtual and augmented reality systems, I am very well aware that we are absolutely able to gather immense amounts of data on our users’ behavior patterns and gain insight into their most unacknowledged desires. One could then easily generate a character that looks like the user’s crush but is in fact an ambassador for a famous sneaker brand. I realized that Philip K. Dick’s The Minority Report was not far off here (maybe 5 to 10 years out), and this is where I drew a line for myself. I decided that we needed to combine the XR space, and in fact any space in which machine learning reads data drawn from unsuspecting consumers, with secure encryption protocols. We need to make sure that identities are unique, private, and safely stored, and that the emulation of public or private characters cannot be misused.

There is this narrative of “inevitability” in the dystopias of Hollywood’s sci-fi movies, as if there were a Hegelian teleology at work in history that would inevitably lead us to a world anchored in the dystopia of a surveillance state: a world of societal regulation via commerce and corporations while robots automate our jobs.

Only two things hold true in all those predictions. Everything else, I believe, is up to us. Yes, we will lose most of our jobs to AI, and second, climate change is real and there will be mass migration. So what are we going to do about it?

Changing to a data democracy

From where we stand now, two main timelines branch out. In one, the intelligence we draw from big data is governed by multinational hybrid structures and governmental bodies. In the other, we manage to correct the failures of Web 2.0 by making sure that Web 3.0 lays the foundation for a more democratic, egalitarian, and clean future.

I am all for machine learning. There is no way we humans could produce predictive analyses the way a superintelligent computer can. For example, machine learning can analyze masses of raw data so that we can more reliably predict weather patterns and the effects of climate change. Using computer vision on micro-satellite imagery, we can extract information on biodiversity, toxicity, and emissions that the human eye cannot detect.

My only point is this: who owns this knowledge, and will it be open or available only to a few? It is easy to deny climate change if the research is no longer fully available, or is skewed.

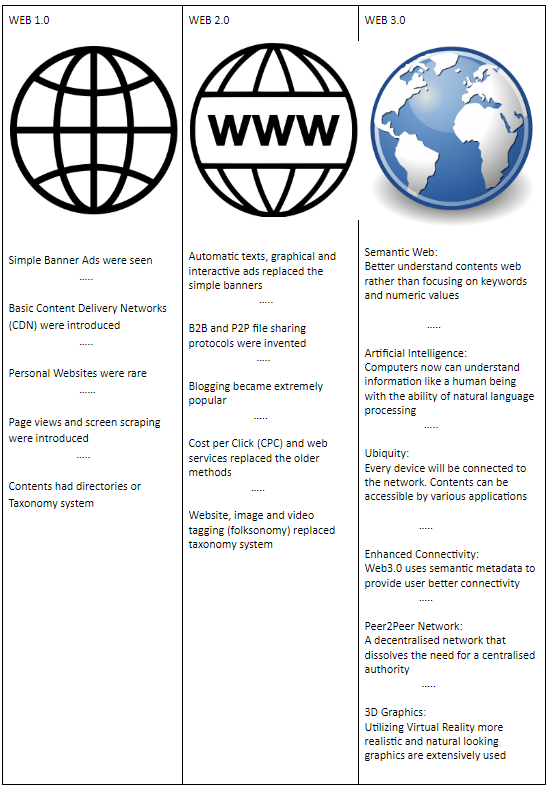

The next Internet: Web 3.0

Many view Web 3.0 as a combination of artificial intelligence and the semantic web. The semantic web will teach computers what data means, and this will evolve into artificial intelligence that can use that information. In its most democratic version, Web 3.0 will be a crowdsourced, peer-to-peer-governed semantic web in which every person has control over their own data, and the intelligence drawn from that data will have a shared, mutually beneficial value to society. Only if everyone has the same access to knowledge can we collectively work on our global problems at scale.

A lot of work is already going into the idea of a semantic web: a web in which all information is categorized and stored in such a way that both computers and humans can understand it. The semantic web embraces open, linked data. It is a web where no object exists twice, where our identities are private and secure, and where a peer-to-peer, decentralized network dissolves the overbearing power of central authority. Sir Tim Berners-Lee, the father of the World Wide Web and creator of HTTP, is currently working on his project Solid, which aims to lay the foundation for such an upgraded, more secure Internet driven by user protection.


Create dApps instead of Apps!

It is time for everyone to start creating dApps instead of apps. dApps are decentralized applications that allow for co-ownership of the systems we all participate in and generate with our data and our content.

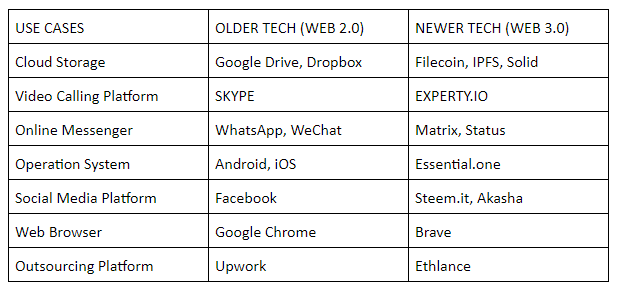

Here are just a couple of Web 3.0 examples. More dApps will come to replace all the big names of today. Naturally, these dApps will have better, more advanced features than the apps they replace. Only time will tell whether our decentralized networks are strong enough and can scale.

To understand how to monetize a network that is free of server silos, and free of the collection and sale of intelligence derived from other people’s data, we will need to start looking into heterotopic marketplaces and digital currencies 3.0. If we want to gain more control over our privacy and our own data while keeping access to knowledge open, we will need to come up with marketplaces, businesses, and monetization models that are more creative than data analytics.

The future

A vision for 2029 includes creating an Internet of shared value: a digital world where people are connected in a way that allows them to be more humane, and where they are incentivized to work on mutual goals in unison, as we all start to understand that we are sitting in the same boat (or on the same planet). The Internet that connects us all will be more user-centric, and so will the products that we use. Consider user-centric medicine and products instead of user-centric advertising. The perk: everyone will earn an income from participating in one or more digital economies, which would be a great way to realize a universal basic income.

When asked how to change our education in a future in which kids will have to compete with AI for jobs, the co-founder of Alibaba, Jack Ma, answered: “We cannot teach our kids to compete with machines. We have to teach them something that makes them smarter than a machine – which a machine cannot achieve. In this way our kids will maybe have a chance 30 years from today. Moving away from knowledge based specialization, we need to teach them value, independent thinking, teamwork and creative thinking.”

However, a glance at our brand-driven social media sites tells us that critical thinking is not exactly encouraged. If we keep turning our kids into data-producing consumers, we will lose this battle. I would add coding to Jack Ma’s list, because not knowing the language of the digital space will be the illiteracy of the future. The more we empower the coming generations to understand what they are actually participating in and agreeing to, the better our chance of protecting the idea of the sovereign citizen, humanist values, and basic human rights in an increasingly automated world.

As Jaron Lanier said, “You are not a gadget.”

| Technology, AI and ethics.